NLP essentials for LLMs

In this first chapter, you will learn about some fundamental aspects of NLP with large-scale neural networks. We will focus on the transformer architecture to cover:

- the core components of this neural net structure and how it addresses some challenges of text processing

- a short historical review of the research and industrialization efforts behind LLMs

- some major open models from the 2020s

- a review of major NLP tasks that can be tackled with such models.

Understanding the transformer architecture

The transformer neural network architecture was introduced in 2017 by a team of Google researchers in the now famous paper "Attention is all you need" footnote:[Vaswani, Ashish; Shazeer, Noam; Parmar, Niki; Uszkoreit, Jakob; Jones, Llion; Gomez, Aidan N.; Kaiser, Lukasz; Polosukhin, Illia (2017-06-12). "Attention Is All You Need". https://arxiv.org/abs/1706.03762]. One of the original motivations of the work was to allow efficient processing of long text sequences. It proved to be a reliable approach that led to many improvements in text processing in the following years, to the point where it is today the dominant neural network architecture for language processing.

To better understand why and how this architecture has been so transformative, it helps to take a quick step back. Artificial Neural Networks (ANNs) are very powerful computing devices. The universal approximation theorem footnote:[https://en.wikipedia.org/wiki/Universal_approximation_theorem] supports the idea that they can represent a very large variety of functions, given appropriate values for their internal weights. This has been exploited in many domains with varying success. However, applying ANNs to text has proven complex due to the inherently sequential nature of such data.

Processing text sequences with neural networks

Character sequence to vector sequence: encoding

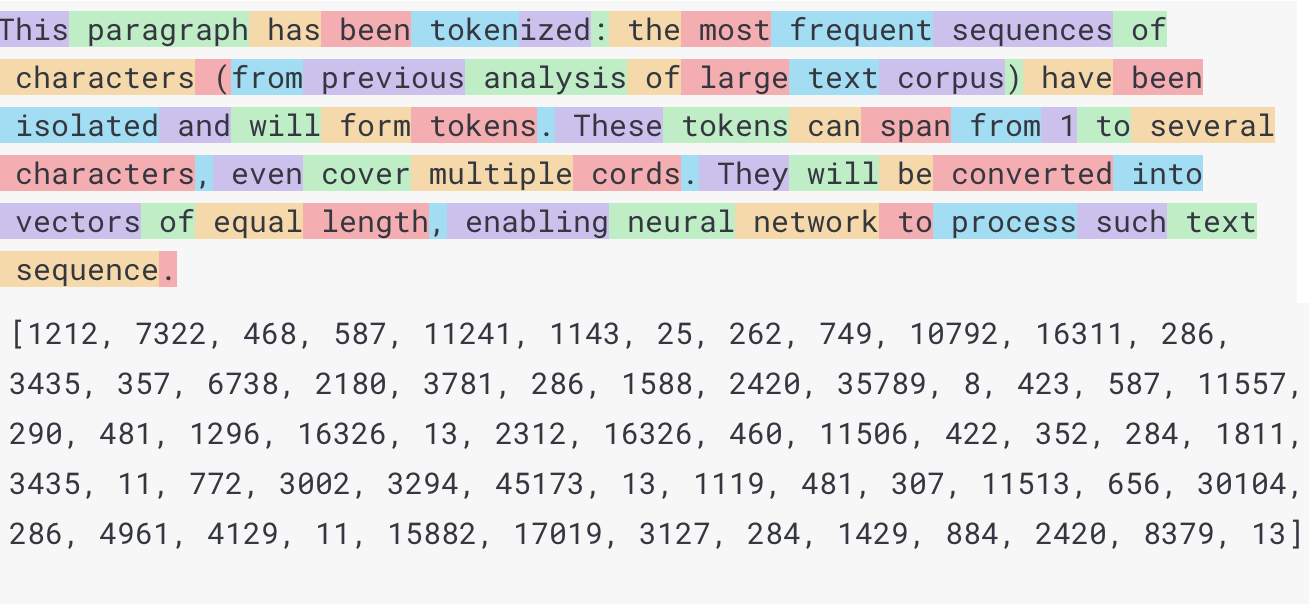

Text by itself can be seen as a sequence of characters. This sequence is drawn from a character set with all its variants (including accents and, more generally, all diacritics), various punctuation marks and a reading direction, but this does not change its fundamental sequential nature. To process it with ANNs, an encoding step converts it into a sequence of vectors. Since characters are a very low-level structure, words (or parts of words) are used as the atomic elements mapped to vectors, which provides a higher-level understanding of the text. This process transforms the sequence of characters into a sequence of tokens that is then encoded into a sequence of one-hot vectors, as shown in the [following figure](#tokenization-and-encoding-of-text-sequence).
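A minimal sketch of these two steps in Python, assuming a naive word-level tokenizer that lowercases the text and splits on whitespace (real systems use more elaborate tokenizers). `tokenize`, `build_vocab` and `one_hot` are hypothetical helper names chosen for illustration.

```python
def tokenize(text):
    """Naive word-level tokenizer: lowercase, then split on whitespace."""
    return text.lower().split()


def build_vocab(tokens):
    """Assign an integer id to each distinct token, in order of first appearance."""
    vocab = {}
    for token in tokens:
        if token not in vocab:
            vocab[token] = len(vocab)
    return vocab


def one_hot(token_id, vocab_size):
    """Sparse encoding: a vector of zeros with a single 1 at the token's id."""
    vector = [0] * vocab_size
    vector[token_id] = 1
    return vector


tokens = tokenize("The cat sat on the mat")
vocab = build_vocab(tokens)
encoded = [one_hot(vocab[t], len(vocab)) for t in tokens]
print(vocab)       # {'the': 0, 'cat': 1, 'sat': 2, 'on': 3, 'mat': 4}
print(encoded[0])  # [1, 0, 0, 0, 0]
```

Both occurrences of "the" map to the same one-hot vector, and every vector is exactly as long as the vocabulary.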

Words have traditionally been the unit of choice for such linguistic analysis because they are easily recognizable and have clear boundaries. They also often carry a specific meaning and can be used to analyze a language's grammar and syntax. However, there are problems with using words as the only unit of analysis. One issue is that word boundaries can be ambiguous, especially in languages that do not separate words with spaces, such as Chinese. Additionally, some words can have multiple meanings depending on the context in which they are used, making it difficult to accurately analyze their usage. Finally, using words as the atomic representation unit for a text sequence means that the complete vocabulary of a language must be defined a priori. This creates multiple challenges, as an exhaustive language vocabulary is often large. For instance, in modern Chinese, 3,500 characters are sufficient to cover 99% of day-to-day vocabulary, which comprises approximately 100,000 words. However, estimates go well beyond 50,000 characters and more than 350,000 words if we count more advanced texts, from ancient literature to recent technical writing. Moreover, words are borrowed across languages, and new words are coined along with new techniques, cultural events or trends. This makes maintaining such an exhaustive vocabulary very complex.

In recent years, there has been a shift towards more generic tokens, such as subwords or characters, obtained through a more brute-force statistical analysis of character sequences. These smaller units can capture more information about a language's structure, are less affected by context and can easily be reused to construct rare words. Additionally, subwords and characters are useful in situations where the language is not well known or does not have a clearly defined vocabulary, such as social media text.
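Byte-Pair Encoding (BPE) is one widely used example of such a statistical approach; the text does not name a specific algorithm, so BPE is our choice for illustration. The sketch below, assuming a toy corpus and single characters as starting symbols, repeatedly merges the most frequent pair of adjacent symbols into a new subword. All function names are hypothetical.

```python
from collections import Counter


def most_frequent_pair(words):
    """words maps a word (tuple of symbols) to its frequency; return the most frequent adjacent pair."""
    pairs = Counter()
    for symbols, freq in words.items():
        for pair in zip(symbols, symbols[1:]):
            pairs[pair] += freq
    # ties are broken by first occurrence; None when no word has two symbols left
    return max(pairs, key=pairs.get) if pairs else None


def merge_pair(words, pair):
    """Replace every occurrence of `pair` by a single merged symbol."""
    merged = {}
    for symbols, freq in words.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged


def learn_bpe(corpus, num_merges):
    """Learn up to `num_merges` merge rules from a whitespace-separated corpus."""
    words = dict(Counter(tuple(word) for word in corpus.split()))
    merges = []
    for _ in range(num_merges):
        pair = most_frequent_pair(words)
        if pair is None:
            break
        merges.append(pair)
        words = merge_pair(words, pair)
    return merges, words


merges, words = learn_bpe("low low low lower lowest newer newer wider", num_merges=3)
print(merges)  # [('l', 'o'), ('lo', 'w'), ('e', 'r')]
```

After three merges, the frequent word "low" is a single token, while "lowest" is still split into `low`, `e`, `s`, `t`: rarer words are built from reusable pieces.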

The set of tokens "known" by the system is similar to a vocabulary that defines the "words" already seen. The issue with this one-hot representation is its sparsity: each token is represented by a single one inside a zero-filled vector as large as the vocabulary (usually from a few thousand to 100 thousand entries for large multilingual models). This makes it very impractical for computation, since almost all of the memory and arithmetic is spent on zeros.

Sparse vector to dense vector: embedding

While ANNs require vectors as input, they are very efficient at handling the wide variability of real numbers. Densifying the one-hot vectors used to represent text elements therefore appeared as a solid technique to improve the efficiency of ANNs on textual data. The idea can be seen as a dimensionality reduction approach. The encoder-decoder approach (here in the form of an autoencoder) has become a classic way to perform such a conversion. Its concept and training approach are fairly simple, as illustrated in figure <<encoder_decoder>>. It can be compared to a lossy compression algorithm, where the weights of two mirror neural nets are trained to minimize the loss of information.

// TODO illustration of encode-decoder [id=encoder_decoder, reftext={chapter}.{counter:figure}] .Overview of the encoder decoder architecture. image::./images/ch01/autoencoder_schema.png[scale=40 width, align=center]

Training the weights is tedious but simple: it does not require any annotation of the text inputs, and the loss function (the reconstruction error) is immediate to compute. Once trained, the encoder layer can be used as a fixed mapping from every known token in the vocabulary to its dense, lower-dimensional embedding vector. Embedding a text sequence is then an efficient lookup from each token to its embedding counterpart.
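A toy sketch of this idea in NumPy, assuming a linear encoder, a softmax decoder, a cross-entropy reconstruction loss and plain gradient descent; the sizes, learning rate and number of steps are arbitrary illustration values. It trains on the one-hot vectors themselves, so, unlike practical embedding methods, it learns no notion of context; it only shows the label-free training loop and the final lookup.

```python
import numpy as np

rng = np.random.default_rng(0)
vocab_size, embed_dim = 8, 3
X = np.eye(vocab_size)  # one one-hot row per vocabulary token

# Encoder (sparse -> dense) and decoder (dense -> sparse) weights
W_enc = rng.normal(scale=0.1, size=(vocab_size, embed_dim))
W_dec = rng.normal(scale=0.1, size=(embed_dim, vocab_size))


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def loss_and_grads(X, W_enc, W_dec):
    H = X @ W_enc                      # dense codes, i.e. the embeddings
    P = softmax(H @ W_dec)             # reconstructed distribution over the vocabulary
    loss = -np.mean(np.sum(X * np.log(P + 1e-12), axis=1))  # cross-entropy, no labels needed
    dZ = (P - X) / X.shape[0]          # gradient of the loss w.r.t. the decoder logits
    dW_dec = H.T @ dZ
    dW_enc = X.T @ (dZ @ W_dec.T)
    return loss, dW_enc, dW_dec


initial_loss, _, _ = loss_and_grads(X, W_enc, W_dec)
learning_rate = 0.5
for _ in range(2000):
    _, dW_enc, dW_dec = loss_and_grads(X, W_enc, W_dec)
    W_enc -= learning_rate * dW_enc
    W_dec -= learning_rate * dW_dec
final_loss, _, _ = loss_and_grads(X, W_enc, W_dec)

# After training, embedding a token is a row lookup: one_hot @ W_enc == W_enc[token_id]
token_id = 3
embedding = W_enc[token_id]
print(f"loss {initial_loss:.3f} -> {final_loss:.3f}, embedding of token 3: {embedding}")
```

The last lines show why the lookup is cheap: multiplying a one-hot vector by the encoder matrix simply selects one of its rows, so the sparse vector never needs to be materialized.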

To sum up, prior to the transformer architecture, these three elementary components were proposed to 1) segment character sequences into tokens, 2) encode them as sparse vectors and 3) associate each of them with a dense vector representation (also called a dense vector or simply an embedding). All of this serves to adapt the representation of textual data to the constraints of ANNs.

Why did we need the transformer architecture?

Why is the sequential nature of text important? Words are a very powerful but also very flexible means of representing information. The context, and thus the sequence, in which words are associated allows people to make sense of polysemous words, or, simply put, words with multiple interpretations that can be understood in different ways depending on the surrounding sentence(s).

// TODO find one (or two) good examples of word sequences (not only in English) where polysemy is resolved by sequential context

To account for the sequence, Recurrent Neural Networks (RNNs) and later Long Short-Term Memory (LSTM) networks were introduced. They add one or more loops to the standard feed-forward architecture of ANNs to "feed back" information about previous items when dealing with sequential data.

An LSTM maintains an internal state built token by token: similarly to the embedding vectors that represent tokens, the LSTM internal state is a vector whose value changes step by step as each token of a sentence is processed. It therefore needs to be computed sequentially, following the input data structure. This creates issues for long sequences, where the earlier part of a sequence can be diluted in the internal state and have less and less impact on the actual results. Long sequences with important information at the beginning are thus difficult to process: the important parts can be hidden or overwritten by the later parts. Moreover, this internal state prevents the computation from benefiting from the heavy parallelization power of Graphics Processing Units (GPUs). This makes LSTMs slow to train on very large amounts of data, which by design limits the overall model complexity.
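To make the sequential dependency concrete, here is a NumPy sketch of a vanilla RNN, a simpler cousin of the LSTM used here as a stand-in (the LSTM gates are omitted). The weights are hypothetical values chosen so that the recurrence is contractive; the sketch measures how much a small change to the first or to the last token moves the final state. LSTM gating slows this decay, but it does not remove the step-by-step dependency that blocks parallelization.

```python
import numpy as np


def rnn_final_state(inputs, W_x, W_h, b):
    """Vanilla RNN: h_t = tanh(W_x @ x_t + W_h @ h_{t-1} + b), one token at a time."""
    h = np.zeros(W_h.shape[0])
    for x_t in inputs:  # step t needs h from step t-1, so steps cannot run in parallel
        h = np.tanh(W_x @ x_t + W_h @ h + b)
    return h


rng = np.random.default_rng(0)
dim, seq_len = 4, 20
W_x = np.eye(dim)
W_h = 0.5 * np.eye(dim)  # hypothetical contractive recurrent weights
b = np.zeros(dim)
inputs = 0.5 * rng.normal(size=(seq_len, dim))
reference = rnn_final_state(inputs, W_x, W_h, b)


def effect_on_final_state(position, eps=1e-3):
    """How far the final state moves when the token at `position` is slightly perturbed."""
    perturbed = inputs.copy()
    perturbed[position] += eps
    return np.linalg.norm(rnn_final_state(perturbed, W_x, W_h, b) - reference)


first_effect = effect_on_final_state(0)
last_effect = effect_on_final_state(seq_len - 1)
print(f"first token: {first_effect:.1e}, last token: {last_effect:.1e}")
```

With these weights, the influence of the first token shrinks by at least half at every step, so after 20 tokens it is several orders of magnitude smaller than that of the last token.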

.Batch processing

Batch processing is a method of processing a large amount of data by dividing it into smaller, manageable chunks called batches. Its main advantage is to make efficient use of the very fast memory buffers located close to the processing units (i.e. the CPUs or GPUs), keeping those units busy. This allows more data to be processed in the same amount of time.

In the example below, assuming a process() function that handles a piece of data, one can implement a simple loop consuming the data one item at a time, or process it in batches for more efficient processing.

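```python
# Hypothetical placeholders standing for your own dataset and processing step
data = list(range(1000))


def process(chunk):
    """Placeholder: accepts either a single item or a batch (list) of items."""
    pass


# Simple algorithm for classic processing in a loop
for i in range(0, len(data)):
    process(data[i])

# Batch processing in a loop
batch_size = 50
for i in range(0, len(data), batch_size):
    data_batch = data[i:i + batch_size]  # the last batch may be smaller than batch_size
    process(data_batch)
```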

NOTE: With the growing use of GPUs in data processing, parallelizability has proven to be a crucial advantage for many learning algorithms. In some instances, GPUs allow data to be processed up to 100x faster, or up to 100x more data to be processed in the same amount of time. This change in paradigm made some older approaches (such as the good old gradient descent for neural networks) much more scalable, allowing them to reach data volumes where the learning process leads to new results.

The transformer architecture was proposed to replace LSTM networks, which suffer from limited computing efficiency. It introduces three novel aspects that allow efficient "batch" processing of tokens and parallelize most of the computation.

What are the novel building blocks?

The transformer neural architecture combines three major specificities that have proven crucial to its performance:

- the self-attention mechanism, which addresses the long-range dependency problem that is central to processing sequences of text and making sense of the concepts they express.

- the positional encoding, which overcomes a limitation of the attention mechanism: on its own, attention ignores the order of the tokens in the text.

- the layered architecture combining several encoder/decoder blocks, themselves composed of several attention heads, which increases the modelling capabilities of the transformer model.

In the following sections, we will go over these specificities in order to 1) better understand the rationale that drove their design and 2) explain the concepts and details of their implementation.

Self-attention mechanism

The self-attention mechanism, used in the transformer in its multi-head form (multi-head self-attention), is a way for the model to weigh the importance of different parts of the input when processing each position.

It works by first linearly projecting the input into multiple different "heads", each of which learns to attend to different aspects of the input. This is done by applying a simple linear transformation to the input with different parameters for each head.

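$$Q = XW^Q, \quad K = XW^K, \quad V = XW^V$$

where $X$ is the input, $W^Q$, $W^K$ and $W^V$ are learned projection matrices (one set per head), and $Q$, $K$ and $V$ are the projected inputs, called queries, keys and values respectively.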

Next, for each head, the model calculates attention weights, which represent the importance of each position in the input when processing the current position. The attention weights are obtained by taking the dot product of the query (Q) of the current position with the key (K) of every position, dividing it by the square root of the key dimension, and applying a softmax function to turn the scores into a probability distribution.

For all positions at once, the attention weights are computed as follows, with $d_k$ being the dimension of the keys:

$$\text{Attention} = \mathrm{softmax}\left(\frac{QK^\top}{\sqrt{d_k}}\right)$$

Alternatively, the dot product can be replaced by a small multi-layer perceptron that scores each query-key pair, an approach known as additive attention.

Finally, the model applies the attention weights to the projected values (V) to obtain the attended output, which is a weighted sum of the values. The outputs of all heads are then concatenated and projected back to the original dimension by a linear layer.

The output of each head is thus:

$$\text{Output} = \text{Attention} \cdot V$$

This process allows the model to balance the relative importance of different parts of the input and to selectively focus on them when processing each position, which improves its ability to model relationships and dependencies between words in the input.
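The computation described above can be sketched in a few lines of NumPy. This is a minimal, unbatched version assuming random (untrained) projection matrices and no masking; the helper names and sizes are illustrative. The last lines also check that, without positional information, permuting the input tokens simply permutes the outputs, a property that matters for the positional encodings discussed next.

```python
import numpy as np


def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)  # subtract the row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def scaled_dot_product_attention(Q, K, V):
    d_k = K.shape[-1]
    weights = softmax(Q @ K.T / np.sqrt(d_k))  # (seq_len, seq_len), each row sums to 1
    return weights @ V, weights                # weighted sum of the values


def multi_head_self_attention(X, heads, W_o):
    """X: (seq_len, d_model); heads: list of (W_q, W_k, W_v), each (d_model, d_head)."""
    outputs = []
    for W_q, W_k, W_v in heads:
        out, _ = scaled_dot_product_attention(X @ W_q, X @ W_k, X @ W_v)
        outputs.append(out)
    # concatenate the heads and project back to d_model
    return np.concatenate(outputs, axis=-1) @ W_o


rng = np.random.default_rng(0)
seq_len, d_model, n_heads = 5, 8, 2
d_head = d_model // n_heads
scale = d_model ** -0.5
X = rng.normal(size=(seq_len, d_model))
heads = [tuple(rng.normal(scale=scale, size=(d_model, d_head)) for _ in range(3))
         for _ in range(n_heads)]
W_o = rng.normal(scale=scale, size=(n_heads * d_head, d_model))

Y = multi_head_self_attention(X, heads, W_o)
print(Y.shape)  # (5, 8)

# Without positional information, shuffling the tokens only shuffles the outputs
perm = np.array([4, 2, 0, 3, 1])
print(np.allclose(multi_head_self_attention(X[perm], heads, W_o), Y[perm]))  # True
```

Every position is computed with the same matrix products, which is what allows a whole sequence (or batch of sequences) to be processed in parallel on a GPU.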

On its own, this self-attention mechanism plays a role similar to the internal state of RNNs. It is, however, particularly effective in this function, as it allows the model to:

- process inputs of varying length with the same weights. This is crucial for textual data, where input lengths vary and semantic dependencies can span long stretches of text.

- learn complex relationships between words in the input, whatever their distance in the sequence, which improves its ability to understand the meaning of the input.

While in theory an LSTM can also model such long-range relations, its sequential processing will always be more influenced by recent items than by those at earlier steps in the sequence. In comparison, self-attention offers both a broader scope for modelling relationships within the sequence and a more balanced impact of such relations. It can attend to any part of the input, regardless of its position in the sequence. This means that the model can use information from any part of the input to make predictions about any other part, which allows it to model more complex relationships and a much broader context across the input sequence. This has proven crucial for understanding the meaning of long sequences.

Moreover, LSTMs process data sequentially by design, which makes them less efficient than the transformer at exploiting parallel hardware. With self-attention, the transformer can process the entire input in parallel and thus benefit greatly from specialized hardware.

All in all, it is a generic mechanism which makes the transformer architecture more versatile, efficient and adaptable to other tasks.

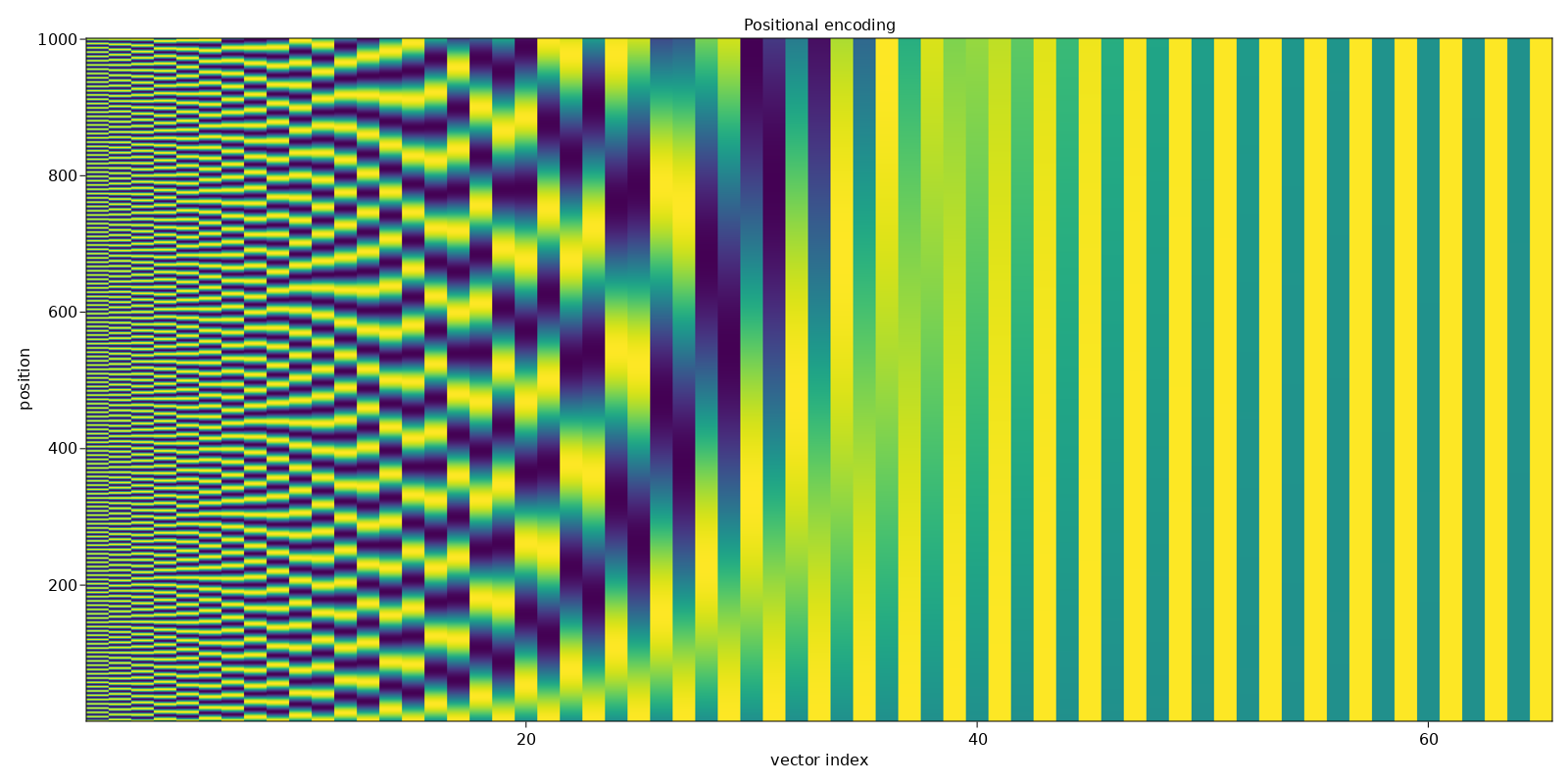

Positional encodings

Token order is a key aspect of interpreting text data. Self-attention weighs the importance of different tokens regardless of their position in the sequence. However, this also means that the model has no inherent understanding of the order of the input. To overcome this problem, the authors of the transformer architecture proposed to encode the position of each token within its vector representation, keeping the fixed-length constraint on the vector.

The positional encoding vector is typically generated by applying a mathematical function to the position of each element in the input sequence. A simplistic approach would be to assign a position number to each token of the sequence and then mix the token's representation with this number. In practice, integer position numbers don't work well because:

- samples seen in training and in prediction may have very different lengths (especially problematic when training sequences are short), and

- values grow fast, since many sentences contain more than 20 tokens. This introduces a strong bias towards later words, whose position values will be high.

The transformer architecture uses a more elaborate solution where the position is itself encoded as a vector. Each dimension is computed from a sinusoidal function of the token position with a specific frequency. The benefits of this encoding approach are manifold:

- the position encoding is unique and deterministic for every position

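- the distance between two positions is encoded consistently: for any fixed offset k, the encoding of position pos + k is a linear function of the encoding of position pos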

- it can encode any position and is not limited to positions seen in training

The frequencies are chosen so that the wavelengths of the sinusoids form a geometric progression from 2π to 10000 · 2π. The encoded position is then simply added to the embedding vector.
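Written out, the encoding from the original paper is the following, where $pos$ is the token position and $i$ indexes pairs of dimensions:

$$PE_{(pos,\,2i)} = \sin\left(\frac{pos}{10000^{2i/d_{model}}}\right), \quad PE_{(pos,\,2i+1)} = \cos\left(\frac{pos}{10000^{2i/d_{model}}}\right)$$

A NumPy sketch, assuming an even `d_model`, arbitrary example sizes and random vectors standing in for learned token embeddings:

```python
import numpy as np


def sinusoidal_positional_encoding(seq_len, d_model):
    """Return a (seq_len, d_model) matrix; d_model is assumed to be even."""
    positions = np.arange(seq_len)[:, None]          # (seq_len, 1)
    two_i = np.arange(0, d_model, 2)[None, :]         # even dimension indices 2i
    angles = positions / np.power(10000.0, two_i / d_model)
    pe = np.zeros((seq_len, d_model))
    pe[:, 0::2] = np.sin(angles)                      # even dimensions
    pe[:, 1::2] = np.cos(angles)                      # odd dimensions
    return pe


rng = np.random.default_rng(0)
seq_len, d_model = 50, 16
pe = sinusoidal_positional_encoding(seq_len, d_model)
token_embeddings = rng.normal(size=(seq_len, d_model))  # stand-in for learned embeddings
transformer_input = token_embeddings + pe               # the encoding is simply added

# The dot product between two encodings depends only on their offset (here 4)
print(np.isclose(pe[3] @ pe[7], pe[10] @ pe[14]))  # True
```

Because the function is defined for any integer position, `sinusoidal_positional_encoding(1000, 16)` works just as well, even if all training sequences were shorter.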

Because the position is part of the input itself, the transformer does not need to process tokens one after the other: it can process all of them in parallel and still understand the relationships between input elements that depend on their order.

In summary, positional encoding provides the transformer architecture with the necessary information about the position of each element in the input sequence, enabling the model to understand the relative position of each element in the input and better model the relationship between input elements.

Stacking encoder/decoder

The last key piece of the transformer architecture is the composition of layers. At a macro level, the encoder and the decoder are each composed of a stack of layers with the same structure, hence the name "stacking" encoder/decoder. Each layer is composed of a multi-head self-attention mechanism followed by a position-wise fully connected feed-forward network (FFN); decoder layers additionally attend to the output of the encoder.

After the multi-head attention sub-layer, the position-wise fully connected FFN is a classic multi-layer perceptron (MLP). This network is applied independently to each position and learns non-linear interactions between the values obtained from the self-attention mechanism. A normalization layer, rightfully named after its role, then normalizes the values in order to stabilize the training process.

To stack these layers, the output of one layer is fed as input to the next; this process is repeated multiple times, with each layer learning to extract different types of information. The output of the last layer is used as the final representation of the input. This repetition allows the model to learn multiple levels of representation of the input, improving its capacity to process more complex input data.
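A compact NumPy sketch of such a stack, assuming single-head attention, no biases, no learned scale and shift in the normalization, and random untrained weights, all simplifications made for brevity. Following the original paper, each sub-layer is wrapped in a residual connection (its input is added back to its output) before normalization. `make_layer`, `encoder_layer` and `encoder` are hypothetical names.

```python
import numpy as np

rng = np.random.default_rng(0)


def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def layer_norm(x, eps=1e-5):
    """Normalize each position (row) to zero mean and unit variance."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def make_layer(d_model, d_ff):
    """Random, untrained weights for one layer (a real model learns them)."""
    def init(n_in, n_out):
        return rng.normal(scale=n_in ** -0.5, size=(n_in, n_out))
    return {"W_q": init(d_model, d_model), "W_k": init(d_model, d_model),
            "W_v": init(d_model, d_model), "W_1": init(d_model, d_ff),
            "W_2": init(d_ff, d_model)}


def encoder_layer(x, p):
    q, k, v = x @ p["W_q"], x @ p["W_k"], x @ p["W_v"]
    attn = softmax(q @ k.T / np.sqrt(k.shape[-1])) @ v  # self-attention sub-layer
    x = layer_norm(x + attn)                             # residual connection + normalization
    ffn = np.maximum(0.0, x @ p["W_1"]) @ p["W_2"]       # same ReLU MLP applied to every position
    return layer_norm(x + ffn)


def encoder(x, layers):
    for p in layers:  # the output of one layer is the input of the next
        x = encoder_layer(x, p)
    return x


seq_len, d_model, d_ff, n_layers = 6, 16, 64, 4
layers = [make_layer(d_model, d_ff) for _ in range(n_layers)]
x = rng.normal(size=(seq_len, d_model))
out = encoder(x, layers)
print(out.shape)  # (6, 16)
```

Since every layer maps a `(seq_len, d_model)` matrix to a matrix of the same shape, layers can be stacked to any depth; model depth is then just the length of the `layers` list.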

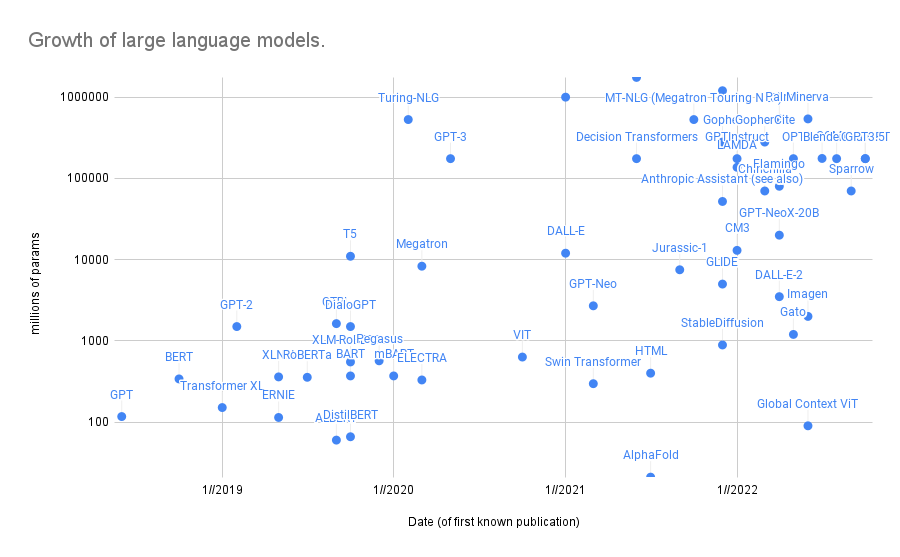

.Bigger and bigger models

NOTE: The transformer architecture, with its self-attention mechanism, positional encoding and stack of encoders/decoders, was designed with a clear hypothesis in mind: scaling the volume of data that can be processed enables larger models to be trained, which in turn can capture more of the subtleties of language.

When talking about language model size, one specifically refers to the number of trained parameters in the model, that is, the internal weights and biases used by each artificial neural unit. Another aspect to take into account is the number of tokens used during training, which is proportional to the size of the training dataset.
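As a worked example of what "number of parameters" means, the sketch below counts the weights and biases of an encoder-only transformer, assuming the configuration published for BERT-base (30,522-token vocabulary, hidden size 768, 12 layers, feed-forward size 3,072, 512 positions, 2 segment types, plus a final pooling layer). The breakdown follows the common BERT layout; other models add or drop components.

```python
def encoder_param_count(vocab_size, hidden, n_layers, ffn_size,
                        max_positions, type_vocab_size, pooler=True):
    """Count weights and biases of a BERT-style encoder-only transformer."""

    def linear(n_in, n_out):
        return n_in * n_out + n_out  # weight matrix + bias vector

    layer_norm = 2 * hidden  # learned gain and bias

    # token, position and segment embedding tables, followed by a layer normalization
    embeddings = (vocab_size + max_positions + type_vocab_size) * hidden + layer_norm

    per_layer = (
        4 * linear(hidden, hidden)                             # Q, K, V and output projections
        + layer_norm
        + linear(hidden, ffn_size) + linear(ffn_size, hidden)  # position-wise FFN
        + layer_norm
    )

    total = embeddings + n_layers * per_layer
    if pooler:
        total += linear(hidden, hidden)  # pooling layer applied to the first token
    return total


bert_base = encoder_param_count(
    vocab_size=30522, hidden=768, n_layers=12, ffn_size=3072,
    max_positions=512, type_vocab_size=2,
)
print(f"{bert_base:,}")  # 109,482,240
```

The result, about 109.5 million, matches the approximately 110 million parameters commonly quoted for BERT-base; roughly a fifth of it (about 23.8 million) sits in the embedding tables.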

How are transformers trained?

The transformer architecture and all its innovations make it possible to efficiently process sequential text data with a very powerful weighting of the relations within it. However, to train these models, one still needs to collect a training dataset large enough to learn and optimize on many tasks. Dataset creation is a very common limitation for any machine learning (ML) project, since it is often a resource-intensive process to 1) collect the data and 2) format it into a training set by identifying specific labels that correspond roughly to the objective of the task.

In the masked language model (MLM) approach, a portion of the input tokens is replaced with a special token ("[MASK]") and the model is trained to predict the replaced tokens based on the context provided by the other tokens in the input. The labels Y associated with an input X are thus known automatically, since it is the input itself that is modified to "hide" some parts. Removing the need to create labels greatly reduces the effort associated with the creation of a training set and allows training to extend to very large corpora.
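A minimal sketch of how such training pairs can be produced automatically, assuming a simple scheme where each token is independently replaced by "[MASK]" with probability `mask_prob`. BERT's actual recipe is slightly richer: of the tokens selected for prediction, some are replaced by random tokens or left unchanged. `mask_tokens` is a hypothetical helper.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, mask_prob=0.15, seed=None):
    """Return (inputs, labels) built from unlabelled tokens.

    labels[i] holds the original token where position i was masked, None elsewhere,
    so the supervision signal comes from the text itself.
    """
    rng = random.Random(seed)
    inputs, labels = [], []
    for token in tokens:
        if rng.random() < mask_prob:
            inputs.append(MASK)
            labels.append(token)  # the model must recover this hidden token
        else:
            inputs.append(token)
            labels.append(None)   # not part of the prediction objective
    return inputs, labels


tokens = "the cat sat on the mat because it was tired".split()
inputs, labels = mask_tokens(tokens, mask_prob=0.3, seed=1)
print(inputs)
print(labels)
```

No human annotation is involved: any raw text can be turned into (input, label) pairs this way, which is what makes training on very large corpora practical.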

MLM was part of the proposal in the original BERT paper. Using this training objective, the authors were able to train the BERT model on a very large corpus of unlabelled text of around 3.3 billion words. After fine-tuning on task-specific datasets, BERT achieved state-of-the-art performance on a wide range of natural language understanding tasks, such as question answering and named entity recognition.

The idea of masking words to learn from their context is not new, but the BERT paper proposed a way of pre-training transformer models that was more effective than previous methods. In particular, the model is trained to use both the left and the right context of each token at the same time, which was found to be important for understanding the language structure. This pre-training method has proven effective in improving the performance of transformer models on a wide range of natural language understanding tasks, especially when the amount of labelled data is limited. By pre-training on a large corpus of unlabelled data, the model learns general language understanding features that can later be adapted to specific NLP tasks by fine-tuning on a smaller task-specific labelled dataset.

Where is the transformer architecture used?

LLMs are being used in a wide range of industries, as they can be fine-tuned to perform a variety of natural language processing tasks.

In the technology industry, LLMs are being used to create home assistants, such as Amazon's Alexa and Google Assistant, which can understand and respond to voice commands. These models are being used to improve the accuracy of voice recognition systems, making it easier for people to interact with computers and other devices using their voice. But they are also used in the interpretation of the commands and often in the selection of the appropriate answers or reactions.

In the field of writing and content creation, LLMs are being used to generate new text, such as articles, music, and code. These models can also be used to summarize large chunks of text, making it easier for people to quickly get the key points from a long article or report. Grammarly uses a language model to check grammar, punctuation, and style.

In the field of business, LLMs are being used to analyze and understand customer interactions and data, such as emails and chat transcripts. For example, Salesforce Einstein uses a large language model to help businesses understand customer sentiment and behavior. These models are also being used to personalize content, ads, or other information for individual users based on their interests, preferences, and behavior.

In the field of healthcare, LLMs can be used to extract structured data from unstructured clinical text, such as electronic health records, which can then be used to support research and clinical decision making.

In the field of education, LLMs can be used to create chatbots that can assist students with their coursework and answer questions about the material. These models can also be used to generate personalized study materials based on the student's level of understanding and learning style.

Overall, LLMs have the potential to be used in many different industries, as they can be fine-tuned to perform a wide range of natural language processing tasks. The possibilities are vast and new applications are being developed all the time as the technology advances.

The history of large language models (LLMs)

The development of LLMs is a relatively recent innovation in the field of natural language processing (NLP). Here is a brief history of some of the key innovations that have led to the development of these models:

Early 2000s: The field of NLP is dominated by rule-based and statistical methods, such as hidden Markov models (HMMs) and conditional random fields (CRFs). These methods are highly effective for certain tasks but are limited in their ability to model the complex relationships between words in the input.

2010s: The field of NLP begins to shift towards the use of deep learning methods, particularly recurrent neural networks (RNNs) and their variants, such as long short-term memory (LSTM) networks. These methods are able to model the relationships between words in the input, but their performance is limited by the difficulty of training models with long-range dependencies.

2013: Google researchers proposed "word2vec", a family of shallow neural models (continuous bag-of-words and skip-gram) trained on unlabelled text to learn dense word embeddings, in the paper "Efficient Estimation of Word Representations in Vector Space".

2017: The transformer architecture is introduced in the paper "Attention Is All You Need" by Google researchers. The transformer architecture uses a self-attention mechanism, which allows the model to weigh the importance of different parts of the input regardless of their position in the sequence. It overcomes the problems of RNNs, making the training of models with long-range dependencies more feasible.

2018: Google researchers introduced the BERT (Bidirectional Encoder Representations from Transformers) model, which uses the transformer architecture and is pre-trained on a masked language modeling task and a next sentence prediction task over a large corpus of text. BERT achieved state-of-the-art results on a wide range of natural language understanding tasks.

2019-2020: Pre-trained transformer-based models such as BERT become more popular, and researchers propose improved variants such as RoBERTa, ALBERT and T5, which outperform BERT on many benchmarks; RoBERTa and T5 in particular are trained on larger datasets, while ALBERT reduces the number of parameters.

2021-2022: A distinct acceleration of research has been observed over these years. Driven by large amounts of computational power and strong engineering efforts, many organizations released new models. Among these, research laboratories from large private companies such as OpenAI (with GPT-3) or Google (with LaMDA and PaLM) engaged in a race for larger models in an attempt to secure their business. At the other end of the spectrum, open research groups emerged, such as EleutherAI (with GPT-J) or the BigScience workshop (with BLOOM), in an attempt to counter privately funded initiatives and return to a more open research approach.

LLMs are the result of a series of innovations in the field of NLP, starting with rule-based and statistical methods in the early 2000s and moving on to deep learning methods and recurrent neural networks in the 2010s. The 2020s have seen an explosion of models built upon the transformer architecture and pre-trained on large datasets with self-supervised objectives such as MLM. This made it possible to train models with billions of parameters on datasets of billions of tokens, greatly improving performance on many NLP tasks.

The GPT family

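GPT (Generative Pre-trained Transformer) and BERT (Bidirectional Encoder Representations from Transformers) are both transformer-based models pre-trained on unlabelled text with self-supervised language modeling objectives, but they use different objectives and different parts of the transformer architecture: BERT stacks encoder layers and is trained with the masked language model (MLM) approach, while GPT stacks decoder layers and is trained to predict the next token. Here are some key differences between the two models: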

Attention mechanism: BERT uses bidirectional self-attention: every token can attend to all the other tokens, both to its left and to its right, which lets BERT model the relationships between words in the input regardless of their positions. GPT uses masked (or causal) self-attention: each token can only attend to the tokens that precede it, which matches the left-to-right flow of information when generating a sentence.

Training objective: BERT is trained on two objectives, masked language modeling and next sentence prediction. It learns to predict the masked tokens from the context provided by the other tokens in the input, and to predict whether a sentence follows a given one, which makes BERT more specialized in understanding the context of a sentence. GPT is trained on a unidirectional language modeling task: predicting the next word given the previous words in a sentence.

Fine-tuning and tasks: BERT is designed for natural language understanding tasks, such as sentiment analysis, named entity recognition and question answering, which typically involve understanding the context of a given sentence. It is often fine-tuned on a specific NLP task, or used as a feature extractor. In contrast, GPT is primarily designed for the language modeling task, which involves predicting the next word in a sentence given the previous words. GPT can then be fine-tuned for a wider range of tasks, including language translation, summarization and other text generation tasks, as its architecture is better suited to generating text.

Size and capacity: The exact number of parameters in a BERT model depends on the specific architecture: the original BERT-base model introduced in the BERT paper has approximately 110 million parameters, and BERT-large, introduced in the same paper, has approximately 340 million. Later GPT models are much larger, with more layers and more parameters, and have been trained on larger hardware clusters: GPT-3 has 175 billion parameters.

Pre-training data: BERT was pre-trained on a large corpus of text sourced from Wikipedia articles and books, totalling approximately 3.3 billion words. GPT models are pre-trained on larger corpora sourced from web pages, books and other text sources. GPT-3, for instance, was pre-trained on a dataset of around 570GB of text from various internet sources, including books, articles and websites. The web text was filtered for quality and deduplicated before training, and the resulting dataset is much larger and more diverse than those used for previous versions of GPT.
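The attention difference between the two families can be sketched with a mask. In this NumPy example (random inputs used directly as queries and keys, with no learned projections, an assumption made for brevity), the bidirectional variant lets every token attend to every other one, while the causal variant used by GPT-style models hides future positions. The last lines show the (prefix, next token) pairs implied by GPT's training objective.

```python
import numpy as np


def attention_weights(Q, K, causal=False):
    scores = Q @ K.T / np.sqrt(K.shape[-1])
    if causal:
        # position i may only attend to positions j <= i (no peeking at future tokens)
        future = np.triu(np.ones(scores.shape, dtype=bool), k=1)
        scores = np.where(future, -np.inf, scores)
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)  # exp(-inf) == 0, so masked positions get zero weight
    return weights / weights.sum(axis=-1, keepdims=True)


rng = np.random.default_rng(0)
X = rng.normal(size=(4, 8))
bidirectional = attention_weights(X, X)        # BERT-style
causal = attention_weights(X, X, causal=True)  # GPT-style
print(np.round(causal, 2))  # upper triangle is all zeros

# GPT-style training pairs: each prefix predicts the token that follows it
tokens = "the cat sat on the mat".split()
pairs = [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]
print(pairs[1])  # (['the', 'cat'], 'sat')
```

In the causal matrix, the first token can only attend to itself, so its weight is 1; in the bidirectional matrix, every weight is positive.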

BERT and GPT share the same core building block, the transformer layer (BERT uses its encoder form, GPT its decoder form). With additional specificities, GPT models have been adapted to perform better in generative tasks and have shown great value in them. The performance gains, however, seem to be more related to the scale of the GPT models (in number of parameters and size of their training set) than to the small adaptations of the training process. This confirms the importance of the self-attention mechanism and of self-supervised pre-training, such as the MLM approach, for the recent LLM trend. In that respect, ML engineering played a critical role in this performance, through techniques such as careful selection and preprocessing of data to ensure both quality and quantity, fine-tuning of hyperparameters, optimization of the model for faster training, and efficient, scalable deployment for practical use. Without the expertise of ML engineers, these models would not be as advanced as they are today.

Using GPT-Neo and BLOOM

GPT-class models were for a long time a closed world of research and development, and there has been much debate about making large language models public without knowing the impact they might have on our society. However, in early 2021, EleutherAI decided to release its GPT-Neo models following a thorough risk-opportunity analysis, noting that several months after the release of GPT-3, no malicious effects had been observed. Among the arguments in favour of making these models available, we find in particular the following points:

- The public availability of large pre-trained language models allows researchers to better understand the capabilities of these models and to improve their safety and security.

- Most of the damage associated with GPT-3's release was done at the moment the paper was published footnote:[Tom B. Brown et al. "Language Models are Few-Shot Learners". https://arxiv.org/abs/2005.14165]. By showing that transformers keep improving as they scale, the paper laid out the path to building a large language model, and any team with enough resources could then be expected to build a similar one. By making its model publicly available, EleutherAI was sharing responsibility for the model with a wider community, possibly reducing the risk of misuse.

Large, unsupervised language models like GPT-Neo are trained on vast amounts of unstructured text data from the internet. These models can generate human-like text and have a wide range of applications, from natural language processing tasks to creative writing. However, the use of unsupervised data can result in models that perpetuate the biases and misinformation present in their training data. To mitigate these risks, careful attention must be paid to the data used to train these models. The GPT-Neo family of models is trained on The Pile, an 825 GiB English text dataset assembled by EleutherAI from 22 smaller sources, including books, academic papers, code and web pages. The text is not lowercased: it is tokenized with the same byte-pair encoding tokenizer as GPT-2, and the model is then trained for a large number of steps.
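Paying careful attention to training data usually starts with simple, mechanical cleaning steps. The sketch below illustrates two of them under stated assumptions: exact deduplication of documents, compared after lowercasing and collapsing whitespace and hashed with SHA-256 from Python's standard `hashlib` module, and a crude minimum-length filter. The function name `clean_corpus` and the `min_words` threshold are hypothetical; real pipelines go further, for instance the GPT-3 paper describes fuzzy deduplication and a learned quality classifier.

```python
import hashlib
from typing import Iterable, List


def clean_corpus(docs: Iterable[str], min_words: int = 5) -> List[str]:
    """Drop too-short documents and exact duplicates (after normalization).

    Normalization (lowercasing, collapsing whitespace) is used only to
    detect duplicates; the documents that are kept are returned unchanged.
    """
    seen = set()  # SHA-256 digests of the normalized documents kept so far
    kept = []
    for doc in docs:
        normalized = " ".join(doc.lower().split())
        if len(normalized.split()) < min_words:
            continue  # too short to be useful training text
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # exact duplicate of a document already kept
        seen.add(digest)
        kept.append(doc)
    return kept


corpus = [
    "Transformers process whole sequences with self-attention.",
    "transformers   process whole sequences with SELF-ATTENTION.",
    "Click here!",
    "The Pile gathers text from many different sources.",
]
print(clean_corpus(corpus))  # keeps the first and the last document
```

Storing digests keeps memory usage proportional to the number of distinct documents rather than to their total size; exact matching, however, misses near-duplicates that differ by only a few words.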

More recently, the BigScience research workshop, a collaborative effort coordinated by Hugging Face (an NLP startup), released a new open-access family of models called BLOOM. BLOOM is a GPT-style, decoder-only transformer whose largest version has 176 billion parameters and was trained on text in 46 natural languages and 13 programming languages. It was trained with the PyTorch-based Megatron-DeepSpeed framework, and its checkpoints can be used through the Hugging Face Transformers library, a popular library for training and deploying NLP models.

What are the limits of those models? How can we use them? What are the risks? How can we mitigate them? What are the next steps?

Applications¶

The following list reviews possible applications of LLMs for textual data and, more generally, natural language processing.

Sentiment Analysis: LLMs can be used to analyze text and determine the sentiment it expresses, for example whether a product review is positive, negative, or neutral.

Text Classification: More generally, they can classify texts such as news articles, social media posts, or customer reviews into predefined categories (for example by topic), making it easy to sort and organize large amounts of text data.

Autocomplete: Another usage of LLMs is to complete a sentence or phrase that the user has started typing, based on the context and the most likely next word or phrase.

Personalization: LLMs can be used to personalize content, ads, or other information for individual users based on their interests, preferences, and behavior.

Language Translation: LLMs can be fine-tuned to translate text from one language to another, making it possible for people who speak different languages to communicate more easily.

Text Summarization: These models can also be used to summarize large chunks of text into shorter, more manageable versions. This is useful for quickly getting the key points from a long article or report.

Question Answering: Language models can be trained to answer questions by reading and understanding the text, and then providing a relevant response.

Text Generation: LLMs can be used to generate new text that is similar in style and content to a given input. This can be used for tasks such as writing articles, composing music, or even generating code.

Conversational assistant: These models can be used to create chatbots that can carry on a conversation with users in a natural and human-like way.

These are just a few examples of the many ways that large language models are being used today, and new applications are being developed all the time as the technology advances.
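The autocomplete use case listed above boils down to repeatedly appending a likely next word to what has been typed so far. The sketch below shows that greedy decoding loop with one loud assumption: a tiny table of bigram counts built from a toy corpus stands in for the LLM's next-token probabilities. A real LLM conditions on the whole context rather than on the previous word only, and works on subword tokens, but the loop is structured the same way. The function names and the corpus are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, List


def build_bigram_model(corpus: List[str]) -> Dict[str, Counter]:
    """Count which words follow each word (a toy stand-in for an LLM)."""
    model: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            model[prev][nxt] += 1
    return model


def autocomplete(prefix: str, model: Dict[str, Counter], max_new_words: int = 5) -> str:
    """Greedy decoding: append the most frequent next word until none is known."""
    words = prefix.lower().split()
    if not words:
        return ""
    for _ in range(max_new_words):
        candidates = model.get(words[-1])  # .get does not create missing keys
        if not candidates:
            break  # no known continuation for the last word
        next_word, _count = candidates.most_common(1)[0]
        words.append(next_word)
    return " ".join(words)


corpus = [
    "attention is all you need",
    "attention is all you want",
    "memory is what you need",
]
model = build_bigram_model(corpus)
print(autocomplete("attention", model))  # attention is all you need
print(autocomplete("memory", model))     # memory is all you need
```

Greedy decoding always picks the single most likely continuation; autocomplete features often show several candidates instead, or sample from the distribution to vary the suggestions.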

Prerequisites for next steps¶

Explaining what's needed for understanding the next chapters

Summary bullets

- The "Attention is all you need" paper, which introduced the transformer architecture, was a breakthrough in the field of NLP

- Large Language Models are a new class of models that are trained on large amounts of unstructured text data from the internet

- These models can generate human-like text and have a wide range of applications, from natural language processing tasks to creative writing

- However, it is important to note that the use of unsupervised data can result in models that perpetuate biases and misinformation present in the data they were trained on